Configuration objects inherit from PretrainedConfig and can be used to control the model outputs. Read the documentation from PretrainedConfig for more information.

MoE Mamba shows improved efficiency and effectiveness by combining selective state space modeling with expert-based processing. This offers a promising avenue for future research in scaling SSMs to tens of billions of parameters. The model's design alternates Mamba and MoE layers, which lets it efficiently integrate the entire sequence context and apply the most relevant expert to each token.[9][10]
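The "most relevant expert for each token" idea can be illustrated with a toy top-1 router. This is a minimal sketch, not MoE-Mamba's actual routing code: the dot-product router, the Switch-style scaling by the gate value, and the names `top1_moe`, `router`, and `experts` are all hypothetical, and the Mamba layers that would alternate with this MoE layer are omitted.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def top1_moe(
    tokens: Sequence[Vector],
    router: Sequence[Vector],                      # hypothetical: one routing vector per expert
    experts: Sequence[Callable[[Vector], Vector]],
) -> Tuple[List[Vector], List[int]]:
    """Route each token to its single highest-scoring expert (top-1 gating)."""
    outputs: List[Vector] = []
    choices: List[int] = []
    for x in tokens:
        gates = softmax([dot(w, x) for w in router])
        best = max(range(len(experts)), key=lambda i: gates[i])
        # Only the chosen expert runs for this token; its output is scaled by
        # the gate value (Switch-style top-1 routing, assumed here).
        y = experts[best](x)
        outputs.append([gates[best] * v for v in y])
        choices.append(best)
    return outputs, choices

experts = [
    lambda v: [2.0 * t for t in v],   # expert 0: doubles its input
    lambda v: [-t for t in v],        # expert 1: negates its input
]
router = [[5.0, 0.0], [0.0, 5.0]]
outputs, choices = top1_moe([[1.0, 0.0], [0.0, 1.0]], router, experts)
```

Because each token touches only one expert, compute per token stays roughly constant as experts are added, while total parameter count (and memory) grows with the number of experts.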

If passed along, the model uses the previous state in all the blocks, so it gives the output for the new input as if the earlier inputs were still part of the context.
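The effect of passing the previous state along can be illustrated with a toy scalar SSM. This is a sketch under simplifying assumptions (one channel, fixed scalar parameters `a`, `b`, `c`, hypothetical function `ssm_scan`), not the library's cache API: processing a prompt, keeping only its final state, and resuming from that state produces the same outputs as processing the full sequence in one pass.

```python
from typing import List, Sequence, Tuple

def ssm_scan(xs: Sequence[float], a: float, b: float, c: float,
             h0: float = 0.0) -> Tuple[List[float], float]:
    """Scalar recurrence h_t = a*h_{t-1} + b*x_t, y_t = c*h_t.
    Returns the outputs and the final state so it can be passed along later."""
    h = h0
    ys: List[float] = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys, h

a, b, c = 0.9, 0.5, 2.0
prompt, continuation = [1.0, 2.0, 3.0], [4.0, 5.0]

# Process the whole sequence at once.
full, _ = ssm_scan(prompt + continuation, a, b, c)

# Process the prompt, keep only its final state, then continue from that state.
_, cached_state = ssm_scan(prompt, a, b, c)
resumed, _ = ssm_scan(continuation, a, b, c, h0=cached_state)
```

The cached state has a fixed size regardless of how long the prompt was, which is what lets an SSM generate token by token without re-reading earlier inputs.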

Context window: the maximum sequence length that a transformer can process at a time.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Two implementations coexist: one is optimized and uses fast CUDA kernels, while the other is naive but can run on any device!

Structured state space sequence models (S4) are a recent class of sequence models for deep learning that are broadly related to RNNs, CNNs, and classical state space models.

We are excited about the broad applications of selective state space models to build foundation models for different domains, especially in emerging modalities requiring long context such as genomics, audio, and video.
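The relationship between S4, RNNs, and CNNs can be made concrete with a time-invariant scalar SSM, which can be computed either step by step like an RNN or as a causal convolution like a CNN. This is a sketch assuming already-discretized scalar parameters `a`, `b`, `c`; real S4 layers use a structured state matrix and compute the kernel far more efficiently, and the function names here are hypothetical.

```python
from typing import List, Sequence

def recurrent_mode(xs: Sequence[float], a: float, b: float, c: float) -> List[float]:
    """RNN view: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t, one step at a time."""
    h = 0.0
    ys: List[float] = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys

def ssm_kernel(length: int, a: float, b: float, c: float) -> List[float]:
    """K[k] = c * a**k * b: how much input x_{t-k} contributes to output y_t."""
    return [c * (a ** k) * b for k in range(length)]

def convolutional_mode(xs: Sequence[float], a: float, b: float, c: float) -> List[float]:
    """CNN view: y_t = sum_{j<=t} K[t-j] * x_j, a causal convolution with a fixed kernel."""
    kernel = ssm_kernel(len(xs), a, b, c)
    return [sum(kernel[t - j] * xs[j] for j in range(t + 1)) for t in range(len(xs))]

xs = [1.0, -2.0, 0.5, 3.0]
rnn_out = recurrent_mode(xs, a=0.8, b=0.5, c=1.5)
cnn_out = convolutional_mode(xs, a=0.8, b=0.5, c=1.5)
```

Both modes give the same outputs (up to floating-point rounding). The recurrent mode is also the kind of simple loop a naive, device-agnostic implementation can fall back on, while the convolutional view only exists because `a`, `b`, and `c` do not change over time.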


Abstract: State space models (SSMs) have recently demonstrated performance competitive with transformers on large-scale language modeling benchmarks while achieving linear time and memory complexity as a function of sequence length. Mamba, a recently released SSM model, shows impressive performance in both language modeling and long-sequence processing tasks. Simultaneously, mixture-of-experts (MoE) models have shown remarkable performance while significantly reducing the compute and latency costs of inference, at the expense of a larger memory footprint. In this paper, we present BlackMamba, a novel architecture that combines the Mamba SSM with MoE to obtain the benefits of both.


Mamba is a new state space model architecture that rivals the classic Transformer. It builds on the line of progress on structured state space models, with an efficient hardware-aware design and implementation in the spirit of FlashAttention.
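What distinguishes Mamba from earlier structured SSMs is that its parameters are selective, meaning they depend on the current input. The toy below sketches that idea for a single channel with a scalar state. It is an illustration under stated assumptions, not Mamba's kernel: the learned projections are replaced by hypothetical scalar weights `w_delta`, `w_b`, `w_c`, and the hardware-aware parallel scan is replaced by a plain Python loop.

```python
import math
from typing import List, Sequence

def softplus(z: float) -> float:
    # Numerically stable log(1 + exp(z)); keeps the step size positive.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def selective_scan(xs: Sequence[float], A: float = -1.0, w_delta: float = 1.0,
                   w_b: float = 1.0, w_c: float = 1.0) -> List[float]:
    """Toy single-channel selective SSM with a scalar state.
    A < 0 keeps the discretized decay exp(delta * A) between 0 and 1."""
    h = 0.0
    ys: List[float] = []
    for x in xs:
        delta = softplus(w_delta * x)   # input-dependent step size
        b_t = w_b * x                   # input-dependent B (hypothetical projection)
        c_t = w_c * x                   # input-dependent C (hypothetical projection)
        a_bar = math.exp(delta * A)     # zero-order-hold discretization of A
        b_bar = delta * b_t             # simplified (Euler) discretization of B
        h = a_bar * h + b_bar * x       # how much to forget vs. write depends on x
        ys.append(c_t * h)
    return ys

ys = selective_scan([1.0, 0.0, 2.0])
```

Because the step size, B, and C change with each token, the layer is no longer time-invariant, so it cannot be computed as one fixed convolution; it has to be evaluated as a scan, which is what Mamba's fused CUDA implementation accelerates.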

The MAMBA Model transformer with a language modeling head on top (a linear layer with weights tied to the input embeddings).
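Weight tying means the language modeling head reuses the input embedding matrix as its output projection, so the logits are the hidden states multiplied by the transposed embedding matrix. The sketch below assumes a tiny hand-written embedding table and treats the Mamba backbone as the identity; `embed` and `lm_head` are hypothetical helpers, not Transformers APIs.

```python
from typing import List, Sequence

Matrix = List[List[float]]

def embed(token_ids: Sequence[int], embedding: Matrix) -> Matrix:
    # Input embedding: look up one row per token id.
    return [list(embedding[i]) for i in token_ids]

def lm_head(hidden: Matrix, embedding: Matrix) -> Matrix:
    # Tied output layer: logits[v] = <hidden, embedding[v]>, i.e. hidden @ embedding.T,
    # so one matrix serves as both input embedding and output projection.
    return [[sum(h * e for h, e in zip(row, emb_row)) for emb_row in embedding]
            for row in hidden]

embedding = [
    [1.0, 0.0],   # token 0
    [0.0, 1.0],   # token 1
    [1.0, 1.0],   # token 2
]
hidden = embed([2], embedding)       # assumption: backbone treated as the identity
logits = lm_head(hidden, embedding)  # [[1.0, 1.0, 2.0]]
```

Tying saves a vocabulary-by-hidden-size parameter matrix, which is a large share of the parameters in small language models.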

This model is a new paradigm architecture based on state space models. You can read more about the intuition behind these here.

Alisan Porter Then & Now!

Alisan Porter Then & Now! Kelly Le Brock Then & Now!

Kelly Le Brock Then & Now! Matilda Ledger Then & Now!

Matilda Ledger Then & Now! Raquel Welch Then & Now!

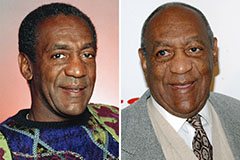

Raquel Welch Then & Now! Bill Cosby Then & Now!

Bill Cosby Then & Now!